Edgar Cervantes / Android Authority

TL;DR

- Microsoft has developed a new AI tool called VASA-1 that can generate videos from a single image and audio clip.

- This technology has incredible potential for positive uses but also carries the risk of harmful manipulation.

- Microsoft insists it is approaching VASA-1 with caution, emphasizing the need for proper regulations before it’s released to the public.

Generative AI continues to reshape our digital landscape, with big strides forward arriving at a steady clip, and Microsoft’s latest innovation is possibly the most groundbreaking, and unnerving, yet.

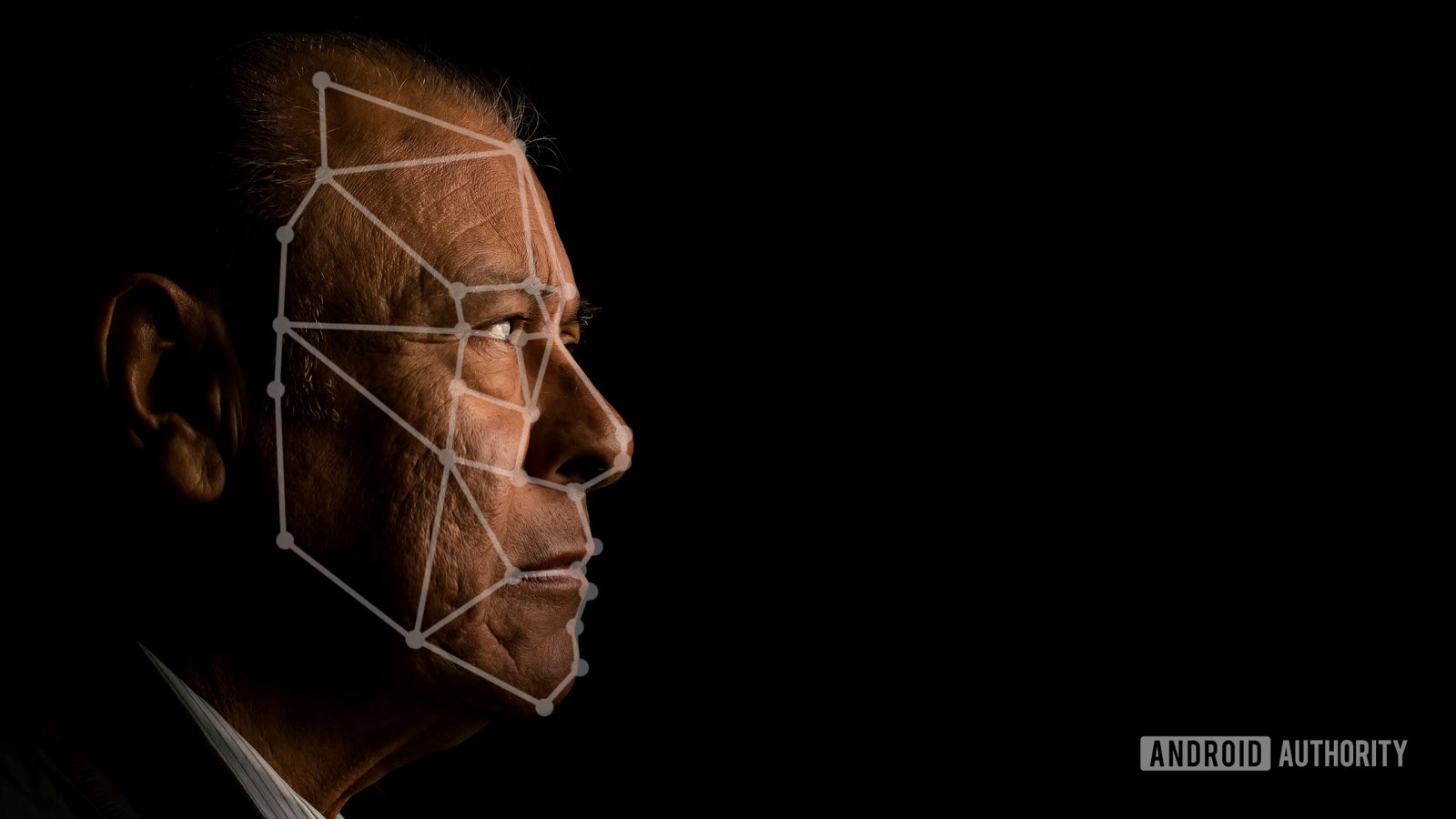

VASA-1, an image-to-video model, blurs the line between real and fabricated video. From a single image and an audio clip, it can generate shockingly realistic footage, complete with lifelike lip movements and expressions.

Microsoft is acutely aware of the technology’s power, noting that VASA-1 is “capable of not only producing precise lip-audio synchronization but also capturing a large spectrum of emotions and expressive facial nuances and natural head motions that contribute to the perception of realism and liveliness.”

According to Microsoft, the system generates high-resolution (512×512) video at an impressive 45 FPS in offline batch processing. Even more remarkable, it can generate lifelike talking-face videos in real time, at up to 40 FPS in online streaming mode.

The potential applications are tantalizing. Imagine educational tools that bring historical figures to life or virtual companions offering support and therapeutic benefits. However, the potential for misuse is equally immense, immediately raising concerns about highly convincing deepfakes capable of spreading misinformation and undermining trust.

Microsoft knows this very well and insists this is primarily a research endeavor, at least for now. The company acknowledged the inherent risks, stating: “…like other related content generation techniques, it could still potentially be misused for impersonating humans. We are opposed to any behavior to create misleading or harmful content of real persons…”

Thankfully, Microsoft maintains it won’t release this potent technology prematurely. Its plan to wait for robust regulations is reassuring and should become the norm for the rest of the tech industry.

The breakneck pace of innovation makes predicting the future — and the consequences of systems like VASA-1 — a daunting task. If such a tool were to be made public, would it usher in a new wave of creativity and accessibility, or would it fuel a rising tide of distrust and manipulation? Let us know your thoughts in the comments below.