Ryan Haines / Android Authority

Conventional wisdom has it that smartphone specifications don’t really matter all that much anymore. Whether you’re looking at the very best flagships or a plucky mid-ranger, they’re all more than capable of daily tasks, playing the latest mobile games, and even taking stonkingly good snaps. It’s quite difficult to find outright bad mobile hardware unless you’re scraping the absolute budget end of the market.

Case in point, consumers and pundits alike are enamored with the Pixel 8 series, even though it benchmarks well behind the iPhone 15 and other Android rivals. Similarly, Apple’s and Samsung’s latest flagships barely move the needle on camera hardware yet continue to be highly regarded for photography.

Specs simply don’t automatically equate to the best smartphone anymore. And yet, Google’s Pixel 8 series and the upcoming Samsung Galaxy S24 range have shoved their foot in that closing door. In fact, we could well be about to embark on a new specifications arms race. I’m talking, of course, about AI and the increasingly hot debate about the pros and cons of on-device versus cloud-based processing.

AI features are quickly making our phones even better, but many require cloud processing.

In a nutshell, running AI requests is quite different from the traditional general-purpose CPU and graphics workloads we’ve come to associate with and benchmark for across mobile, laptop, and other consumer gadgets.

For starters, machine learning (ML) models are big, requiring large pools of memory to load up even before we get to running them. Even compressed models occupy several gigabytes of RAM, giving them a bigger memory footprint than many mobile games. Secondly, running an ML model efficiently requires more unique arithmetic logic blocks than your typical CPU or GPU, as well as support for small integer number formats like INT8 and INT4. In other words, you ideally need a specialized processor to run these models in real-time.
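To put rough numbers on that memory claim, here is a minimal back-of-the-envelope sketch. The 7-billion-parameter model size is a hypothetical figure chosen for illustration and does not come from any specific phone or model mentioned here. The calculation counts only the weights and ignores activations and runtime overhead, so real footprints will be somewhat higher.

```python
# Bits used to store a single weight in each number format.
BITS_PER_WEIGHT = {"FP32": 32, "FP16": 16, "INT8": 8, "INT4": 4}


def weight_footprint_gb(num_params: int, fmt: str) -> float:
    """Approximate size of the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


# Hypothetical model size (an assumption for illustration only).
NUM_PARAMS = 7_000_000_000

for fmt in BITS_PER_WEIGHT:
    print(f"{fmt}: {weight_footprint_gb(NUM_PARAMS, fmt):.1f} GB")
```

Under these assumptions, dropping from FP16 to INT4 cuts the weights from roughly 14 GB to about 3.5 GB, which is the difference between a model that cannot fit in a phone's RAM and one that plausibly can. That is why hardware support for small integer formats matters so much.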

For example, try running Stable Diffusion image generation on a powerful modern desktop-grade CPU; it takes several minutes to produce a result. OK, but that’s not useful if you want an image in a hurry. Older and lower-power CPUs, like those found in phones, just aren’t cut out for this sort of real-time work. There’s a reason why NVIDIA is in the business of selling AI accelerator cards and why flagship smartphone processors increasingly tout their AI capabilities. However, smartphones remain constrained by their small power budgets and limited thermal headroom, meaning there’s a limit on what can currently be done on device.

Damien Wilde / Android Authority

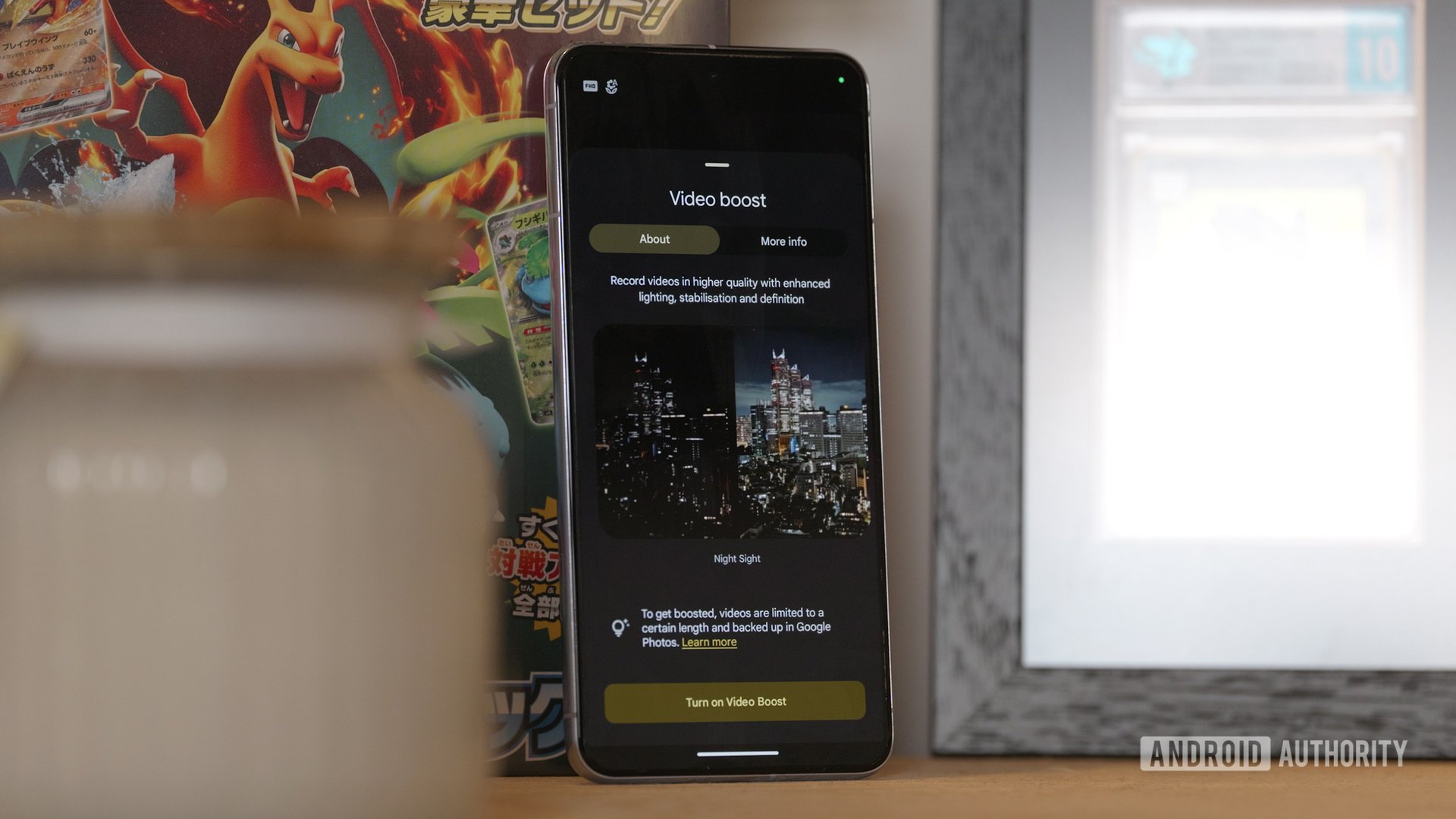

Nowhere is this more evident than in the latest Pixel and upcoming Galaxy smartphones. Both rely on new AI features to distinguish the new models from their predecessors and sport AI-accelerated processors to run useful tools, such as Call Screening and Magic Eraser, without the cloud. However, peer at the small print, and you’ll find that an internet connection is required for cloud processing for several of the more demanding AI features. Google’s Video Boost is a prime example, and Samsung has already clarified that some upcoming Galaxy AI features will run in the cloud, too.

Leveraging server power to perform tasks that can’t be done on our phones is obviously a useful tool, but there are a few limitations. The first is that these tools require an internet connection (obviously) and consume data, which might not be suitable on slow connections, limited data plans, or when roaming. Real-time language translation, for example, is no good on a connection with very high latency.

Local AI processing is more reliable and secure, but requires more advanced hardware.

Second, transmitting data, particularly personal information like your conversations or pictures, is a security risk. The big names claim to keep your data secure from third parties, but that’s never a guarantee. In addition, you’ll have to read the fine print to know if they’re using your uploads to train their algorithms further.

Third, these features could be revoked at any time. If Google decides that Video Boost is too expensive to run long-term or not popular enough to support, it could pull the plug, and a feature you bought the phone for is gone. Of course, the inverse is true: Companies can more easily add new cloud AI capabilities to devices, even those that lack strong AI hardware. So it’s not all bad.

Still, ideally, it’s faster, less expensive, and more secure to run AI tasks locally where possible. Plus, you get to keep the features for as long as the phone continues to work. On-device is better, hence why the ability to compress and run large language models, image generation, and other machine learning models on your phone is a prize that chip vendors are rushing to claim. Qualcomm’s latest flagship Snapdragon 8 Gen 3, MediaTek’s Dimensity 9300, Google’s Tensor G3, and Apple’s A17 Pro all talk a bigger AI game than previous models.

Cloud processing is a boon for affordable phones, but they might end up left behind in the on-device arms race.

However, those are all expensive flagship chips. While AI is already here for the latest flagship phones, mid-range phones are missing out, mainly because they lack the high-end AI silicon to run many features on-device, and it will be many years before the best AI capabilities come to mid-range chips.

Thankfully, mid-range devices can leverage cloud processing to bypass this deficit, but we haven’t seen an indication that brands are in a hurry to push these features down the price tiers yet. Google, for instance, baked the price of its cloud features into the price of the Pixel 8 Pro, but the cheaper Pixel 8 is left without many of these tools (for now). While the gap between mid-range and flagship phones for day-to-day tasks has really narrowed in recent years, there’s a growing divide in the realm of AI capabilities.

The bottom line is that if you want the latest and greatest AI tools to run on-device (and you should!), we need even more powerful smartphone silicon. Thankfully, the latest flagship chips and smartphones, like the upcoming Samsung Galaxy S24 series, allow us to run a selection of powerful AI tools on-device. This will only become more common as the AI processor arms race heats up.